On RL Stagnation

In "Towards Deployable RL", the authors express concern about stagnation in reinforcement learning (RL) research. They identify five research practices behind the stagnation: overfitting to specific benchmarks, wrong focus, detached theory, uneven playing grounds, and lack of experimental rigour. As a solution, they advocate the "deployable RL" research framework, which focuses on solving challenges instead of beating benchmarks. This blog post is a response to that article.

Reasons for Stagnation

The authors conflate different problems and misattribute them to “RL stagnation.” The causes they have identified are all contributing factors but cannot explain RL stagnation.

- Overfitting to specific benchmarks. If shaving a few decimal points off word error rate (WER) and character error rate (CER) for LLMs yields tangible value, then overfitting to RL benchmarks is not the main problem.

- Wrong focus. I agree that the emphasis on sample efficiency in RL research needs to change. However, I argue that the most critical engineering consideration should be training efficiency measured in wall-clock time. Training efficiency (see blog post) is much more indicative of the impact of a research paper.

- Uneven playing grounds and lack of experimental rigour. Both are contributing factors. However, they apply as much to other fields as they do to RL.

Indeed, it is more difficult to start researching RL methods because, unlike supervised learning, RL often requires designing the environment (e.g., brachiation) in addition to the algorithm. The diversity of RL applications creates the impression that RL is moving more slowly than supervised learning. In reality, RL has covered a broader problem space. Bipedal locomotion, quadrupedal locomotion, and brachiation are each distinct problems, not to mention chess/Go, StarCraft/Dota, matrix multiplication, and chip layout.

Another problem with the analysis is that the authors conflate RL with its applications, such as robotics. RL should be compared to supervised learning, not to CV or NLP. People don't say supervised learning is stagnant, even though most people are still using Adam or SGD. It is important to distinguish RL from its applications.

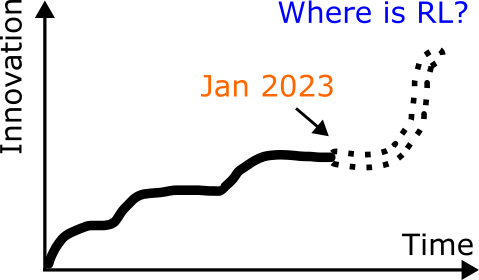

Has RL research really stagnated?

RL, and more specifically its applications, feels stagnant because people subconsciously hold it to a higher standard.

In supervised learning, human-level performance is the ceiling. Objectively, ChatGPT and other LLMs speak at an average human level, solve grade-level math problems, and have a basic understanding of a wide range of topics. Similarly, the drawing ability of Stable Diffusion and the like is above that of the average human but still only comparable to that of professional artists.

With RL, AlphaGo Zero (2017) and AlphaTensor (2022, fast matrix multiplication) have expanded our knowledge of the world. In 2021, RL was used to design chips at a level objectively comparable or superior to that of human experts. In each case, RL was used to discover things that no human had previously known, regardless of how niche the application is. Discoveries don't happen every day, so it is normal to feel, from time to time, that RL and its related applications are stagnant.

The Real Problems (What does “RL Stagnation” mean?)

What the authors, and many others, mean by "RL stagnation" is a combination of:

- Improvements are not fundamental. Compared to supervised learning algorithms, RL algorithms converge more slowly and often only to local optima. RL algorithms are also not generally applicable: different algorithms perform better or worse on different problems. While this is a valid criticism, it is not a reason to stop fundamental ("RL first") research on RL algorithms.

- Popular benchmarks do not include "real" problems. A related problem, which the authors imply, is the lack of investment from large corporations and startups. The question should be why more companies aren't offering prizes for the challenges they want solved. In robotics, giving away robots for free is clearly not economically viable. In medicine and other fields, holding onto training data or simulators is presumably more valuable than solving challenges more quickly.

- RL applications have not provided tangible value. RL has been successfully applied to games, math, engineering, and probably more. It is easy to dismiss the progress because these applications are not as visible as CV and NLP applications.

The Solution

- Acknowledge that RL is hard. It is one thing to solve a problem with a known solution (supervised learning); it is another when no prior solution is known (RL). Progress is being made, but it is unreasonable to expect breakthroughs every year.

- Acknowledge that RL applications are niche. Despite all that RL has accomplished, people still believe that RL has stagnated. In the Go community, many professional players use FineArt to train and review, and many amateur players use KataGo as part of their training routine. However, the average person is not making their own chips at home or trying to optimize matrix multiplication. RL applications are niche, and that is OK.

- Focus on time efficiency. Time efficiency depends on (1) whether an algorithm can be implemented efficiently and (2) whether it can scale with more hardware resources. Sample efficiency is a convenient way to compare algorithms across different weight classes. But ultimately, time efficiency is the common scaling factor for accelerating research.

- Lower the entry barrier. Open-source everything: code and pretrained models. Make your algorithm, environment, and models available so that people can build on top of them.

- Build demos and products. The best way to convince other people that your model works is to let them use it. Tools like Streamlit and Hugging Face Spaces make building demos easy. For Go, there are KaTrain, AI Sensei, and other easy-to-use training companions. For physics-based character animation, we should move towards building web demos in JavaScript, e.g., with ammo.js, JoltPhysics, and Rapier.
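
To make the time-efficiency point in the list above concrete, here is a minimal sketch of how sample efficiency and wall-clock time can disagree. Everything in it is an assumption for illustration: the two algorithm profiles, their numbers, and the simple scaling model (each extra worker adds a fixed fraction of one worker's throughput) are hypothetical and not taken from any paper or benchmark.

```python
from dataclasses import dataclass


@dataclass
class Algorithm:
    """Hypothetical training profile; every number used below is made up."""
    name: str
    samples_to_target: int              # environment steps needed to reach a target return
    samples_per_sec_per_worker: float   # throughput of a single worker
    parallel_efficiency: float          # share of a worker's throughput kept by each extra worker


def wall_clock_seconds(algo: Algorithm, workers: int) -> float:
    """Estimate time to target under an assumed, simplified scaling model."""
    # The first worker contributes fully; each additional worker contributes
    # only `parallel_efficiency` of a full worker's throughput.
    effective_workers = 1 + (workers - 1) * algo.parallel_efficiency
    throughput = algo.samples_per_sec_per_worker * effective_workers
    return algo.samples_to_target / throughput


# Needs 10x fewer samples, but is slow per sample and scales poorly.
sample_efficient = Algorithm("sample_efficient", 1_000_000, 500.0, 0.2)
# Needs 10x more samples, but is fast per sample and scales well.
scalable = Algorithm("scalable", 10_000_000, 5_000.0, 0.9)

for workers in (1, 8, 64):
    for algo in (sample_efficient, scalable):
        hours = wall_clock_seconds(algo, workers) / 3600
        print(f"{algo.name:>16} | workers={workers:>2} | {hours:6.2f} h")
```

Under these made-up numbers, the two algorithms tie on a single worker, and the less sample-efficient one pulls ahead as hardware is added. That is the sense in which time efficiency, rather than sample count alone, determines how fast research can iterate.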

In conclusion, I believe RL has made and is making steady progress. It is worthwhile to recognize that RL is hard and that RL applications are niche. The lack of media coverage of successful RL applications, at least compared to Stable Diffusion or ChatGPT, creates the impression that RL is stagnant. However, that is not a reason to stop fundamental research on RL algorithms, nor is it a reason to focus only on applications that create tangible value. Future algorithm research should focus on time efficiency instead of sample efficiency. Faster iteration is the key to enabling broader adoption.